Evaluation of 7 programs all lapsing at the same time

Grosvenor was engaged to evaluate all seven programs, bringing efficiencies as the single provider across all seven evaluations.

Grosvenor was engaged by a Victorian Government regulator to develop a MER Framework for the regulator’s five-year strategy.

Grosvenor was engaged to undertake the mid-term evaluation of the Plan, focusing on assessing the extent to which the Plan’s rationale remained relevant, and the progress in the delivery of programs.

Our client engaged us to evaluate three separate but interlinked programs, all funding grants designed to assist organisations in acquiring the new technology and enabling infrastructure.

This kit was produced to give Program Managers a guide that is easy to read and understand. We explain the principles while walking you through an example program that demonstrates how to apply the techniques in practice.

Building evaluation culture is all about shaping the way an organisation thinks and talks about evaluation as a key component of improvement and innovation. Our evaluation maturity model has 10 segments we believe each organisation needs to focus on to uplift overall evaluation capability and maturity. The model can be used as a guide to identify the priority areas to focus on when building evaluation culture and maturity.

Use this guide to kick-start the development of your M&E framework.

But for many of us, the art of program evaluation can seem a bit of a mystery. What is it and what is it for? More importantly, how do I do it well?

The Evaluation Planning Checklist serves as a practical tool that summarises and organises the various aspects that should be considered when establishing a sound and structured program evaluation.

An easy-to-use, interactive, fill-it-in-as-you-go template to help you develop your M&E Framework.

Being asked to manage a new, or existing, program can seem overwhelming. To help you navigate the world of program management, Grosvenor Public Sector Advisory has developed a series of articles to break down the key activities and tasks you can implement to make your life easier.

Program evaluation can be confusing. Not only are there different types of program evaluation, but different people use different terms to mean different things – or the same thing! (As if there wasn’t enough confusion already!)

The way evaluators develop, test and present recommendations has a huge impact on how improvements will finally manifest (if at all). Good recommendations and improvement opportunities identified during an evaluation are those that can be put into action. To maximise the impact and value of each evaluation, it is critical that program managers aren’t left asking ‘what next’ once the final report is delivered.

Regardless of whether you’ve just inherited a program, established something new or are trying to work out where your program fits in this new environment, we’re here to help you break it down.

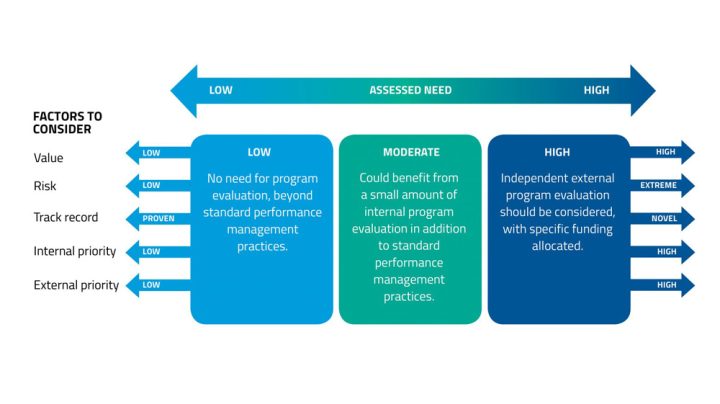

Monitoring and managing the performance of a program is an integral part of program management. This blog helps you identify the factors you need to consider when developing a performance management framework.

It is very easy to say you need to ‘collect data from a sample’, but what does this really mean? How many people should you be engaging with to collect something meaningful? Question no more! We’ve put together some quick and easy ‘rules of engagement’ to help you understand what your sample size needs to be.

In the ideal evaluation world, an evaluation occurs in the context of a beautifully planned and executed monitoring and evaluation framework. You have time and resources and oodles of beautiful, relevant, high-quality data to inform your evaluation. But who lives in this ideal world? Oftentimes, you are notified late of the requirement, there’s a tight budget and timeframe, and for the final kicker: you have no data.

Most people developing their program logics and program theory focus almost exclusively on how the targeted outcomes can be achieved.

How will decisions get made? Who is ultimately responsible? There’s a lot to think about. Fortunately, there are multiple things you can do to simplify and bring clarity to this process, ensuring that your decision making is both efficient and effective (it’s a win-win!).

Improvement opportunities are only useful if you know what to do with them – both evaluators and program managers must have clarity around how each recommendation and improvement can, or should, be implemented.

An integral part of understanding how well your program has performed is having a monitoring and evaluation framework (M&E framework) and applying it to the specific context of your program.

Grosvenor has developed a capability framework that can be used to review and assess the evaluative culture of any organisation. Download our guide to learn how you can benefit from our capability model.

The usual report-writing mantra of keeping the audience in mind applies equally to program evaluation reports. The problem is that the intended audience for program evaluation reports is often very wide.

This template is intended to capture the evaluation requirements across an organisation each year or over a period of years.

Poorly designed Program Evaluation questionnaires can lead to wasted time and erode stakeholder confidence. Worse, they can result in faulty data, which can lead to flawed decision-making and have serious impacts on the outcome of a program. This guide will help you appraise your evaluation questionnaires and ensure you’re collecting the right data to truly measure the success of your program.

This tool is intended for two aims: ‘unpacking’ program objectives or outcomes, and determining the data requirements for monitoring and evaluating the program against its outcomes. The tool can be used to focus on just one, or both, of these aims.

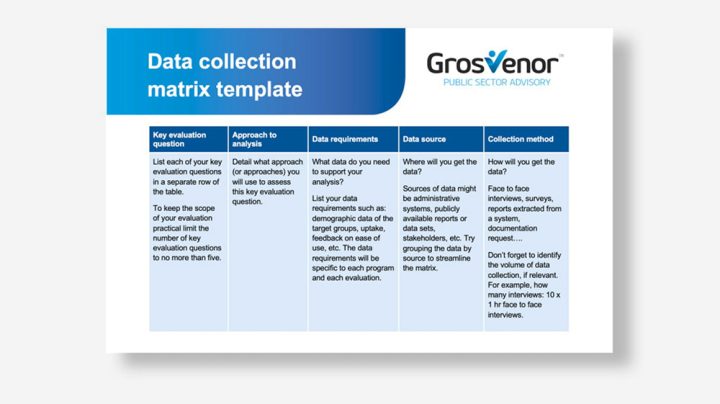

Use this data collection matrix to capture your data collection requirements for your next evaluation. You can use this template as a stand-alone tool or as part of an evaluation plan.

Program evaluation can be daunting for program managers approaching it for the first time. Rather than being given clear guidance on the how-to’s of program evaluation, newcomers are often expected to deduce program evaluation techniques from dry academic texts hidden behind a wall of baffling jargon.

While program evaluation might seem a little scary, it actually presents a raft of exciting opportunities for program managers to showcase the successes of their programs and ensure that their teams are performing to their full potential.

The checklist contained in this paper serves as a practical tool that summarises and organises the various aspects that should be considered when establishing a sound and structured program evaluation.

Announcing an evaluation requirement generates two kinds of responses: enthusiastic, embracing the opportunity to recognise achievements; or resistant and full of excuses.

By their nature, program evaluations seek to determine if a program is working, which means that, in reality, the answers are not always going to be positive.

It is no secret that social programs are all about the community – whether designing, delivering or evaluating a program we’re all working to improve the lives of, or outcomes for, populations in a particular area or sector. In recognition of this, it is critical that the views of program stakeholders are captured at all stages of the program lifecycle.

It’s easy to fall into the trap of thinking the evaluator’s job is over once the final report is submitted. In terms of the adoption of improvement opportunities and application of findings, this is akin to an evaluator saying “Over to you now! Good luck!” The evaluator’s job is not over once the final report is submitted!

AI Evaluation Expert

BA, MBA, PRINCE2, IPAA, CIPS, AICD

Kristy is one of Grosvenor’s most experienced public sector advisors, with over a decade of experience advising clients on complex program evaluation, organisational review and procurement projects.

Strategy and Transformation

Master of Arts in Organisational Psychology, University of Witwatersrand; PRINCE2 Project Management (Foundation)

Sarika has comprehensive expertise across the elements of organisational transformation, specialising in strategy, operating models, organisational design and development, business process re-engineering and change management. She has led numerous strategy and culture sessions and governance initiatives.

Bachelor of Social Science (Psychology)

Sophie is an experienced project manager and has supported transformative future-proofing initiatives. Sophie strives to deliver positive impact for our clients by applying a creative and innovative approach to solve problems.

Bachelor of Educational Studies (BEdSt), Master of Business Administration (MBA)

Rachel is passionate about developing efficient processes to support ongoing business success. She enjoys helping organisations to understand and enhance their effectiveness and efficiency across a range of business practices.

Not sure how to monitor your program’s performance?

Don’t know where to start to judge whether your program is on track?

A monitoring and evaluation framework is an integral part of understanding how well your program is performing.

It helps you set out and record the activities you need to complete to assess your program’s performance over time and judge whether it is on the right track.

We created this M&E framework guide to help you build your own. The guide gives you the right structure and a useful explanation of what is typically required in each section.

Download our comprehensive guide to build an M&E framework for your own program today.

Whether you are looking to get more out of your projects, are interested in collaboration or would like to talk about joining the Grosvenor team, please get in touch.